Last Updated on October 15, 2022

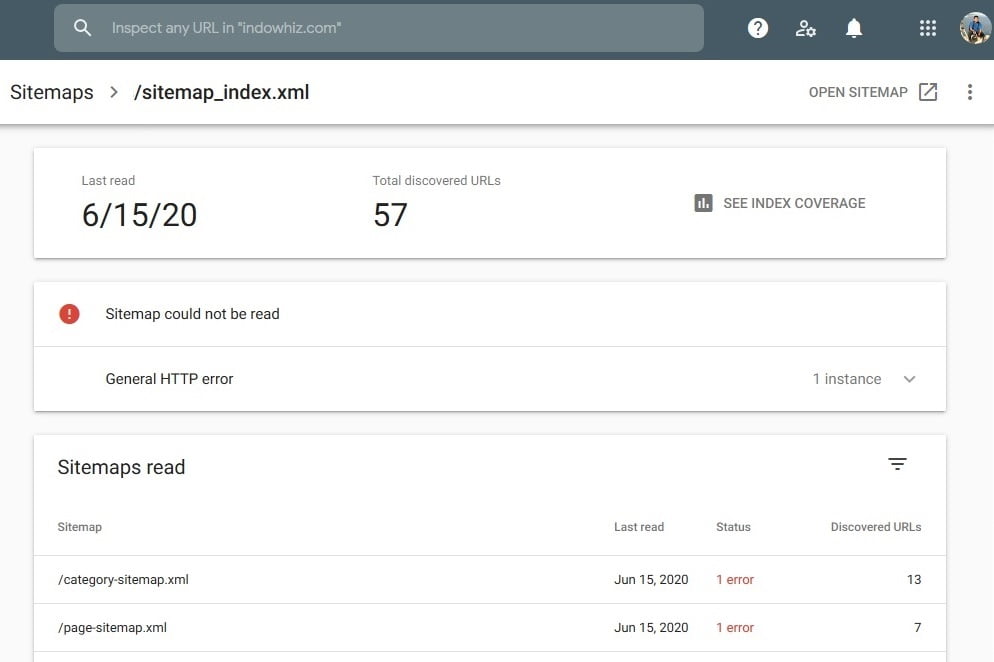

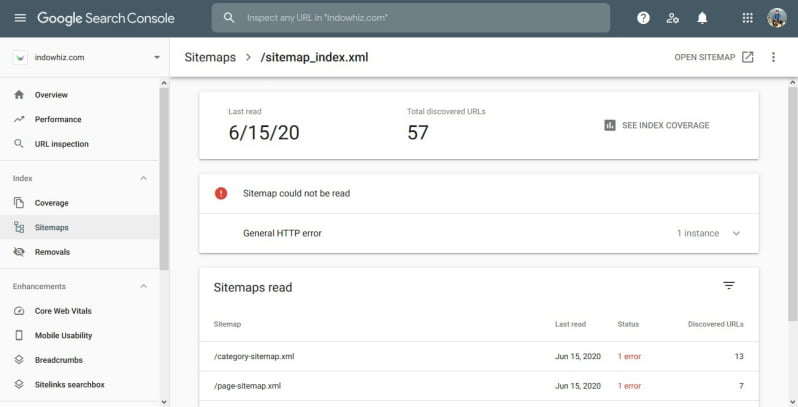

A sitemap is an important tool that helps search engines (e.g., Google, Bing, Yandex) index your web pages. Search engines generally recognize several common sitemap formats, including XML, RSS, Atom, and TXT [1]. Usually, you first need to register your sitemap in the search engine's webmaster tool (e.g., Google Search Console) before it can start indexing your web pages.

However, registering a sitemap is not always error-free, and website administrators may run into unexpected problems. For example, the webmaster tools of some search engines may be unable to access or read a sitemap. Many factors can cause this issue, including a problem with the sitemap itself or a 403 error (access denied) that makes it unreadable.

Note: a 403 error (access denied) means that the search engine does not have permission to access your sitemap page.

1. Checking access to the sitemap

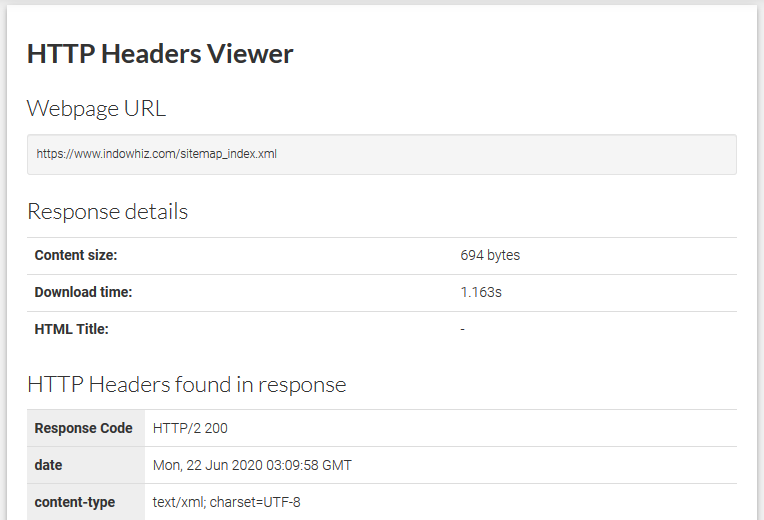

There are several ways to check whether a search engine bot can access and crawl your sitemap. You can use a website that offers a bot-checking service, or use Chrome with its user agent changed. Generally, these services are designed for sitemaps in XML format, but it never hurts to try them on a sitemap in another format.

A. Using the sitemap checker site

Many sites offer services to check the access to sitemap, such as:

- XML-Sitemaps.com (select Google in User Agent)

- Google Robots.txt Tester

- Google URL Inspection Tool

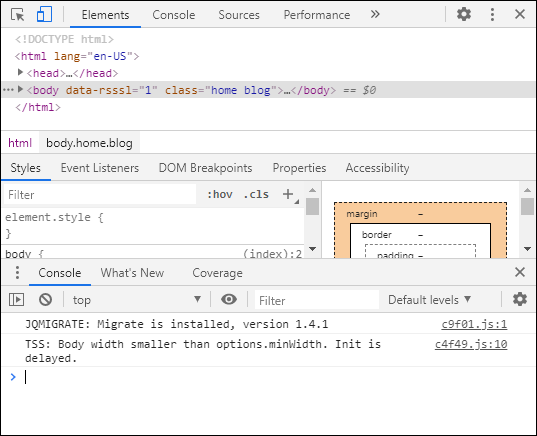

B. Using the Chrome browser

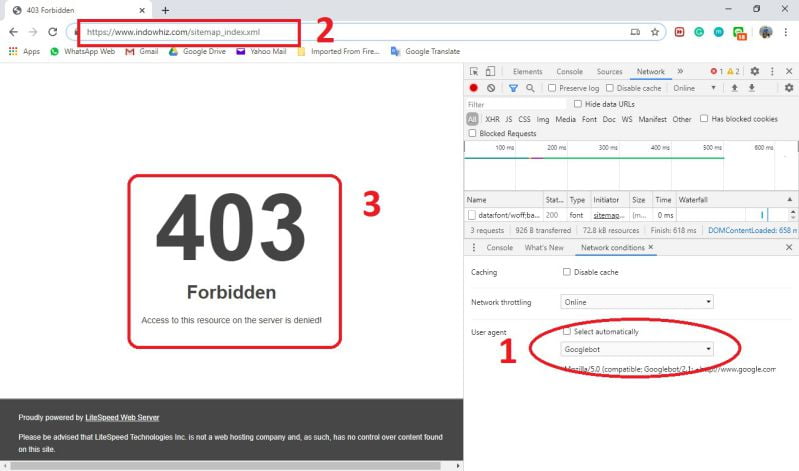

Alternatively, you can use the Chrome browser to check access to the sitemap. To do so, change the user agent to Googlebot, which is Google’s main crawler [2].

First, open the developer tools as follows:

- on the Chrome browser, click the menu or ⋮ button in the upper-right of your browser,

- select More tools > Developer tools.

Then you will see a screen similar to Figure 2 in your Chrome browser.

Second, change the user agent as follows:

- click the ⋮ button in the lower-left of developer tools (in the bottom menu near the Console tab),

- click Network conditions,

- in the User agent box, uncheck Select automatically,

- then select Googlebot from the dropdown option.

After changing the user agent, check whether you can access your sitemap, as illustrated in Figure 3.

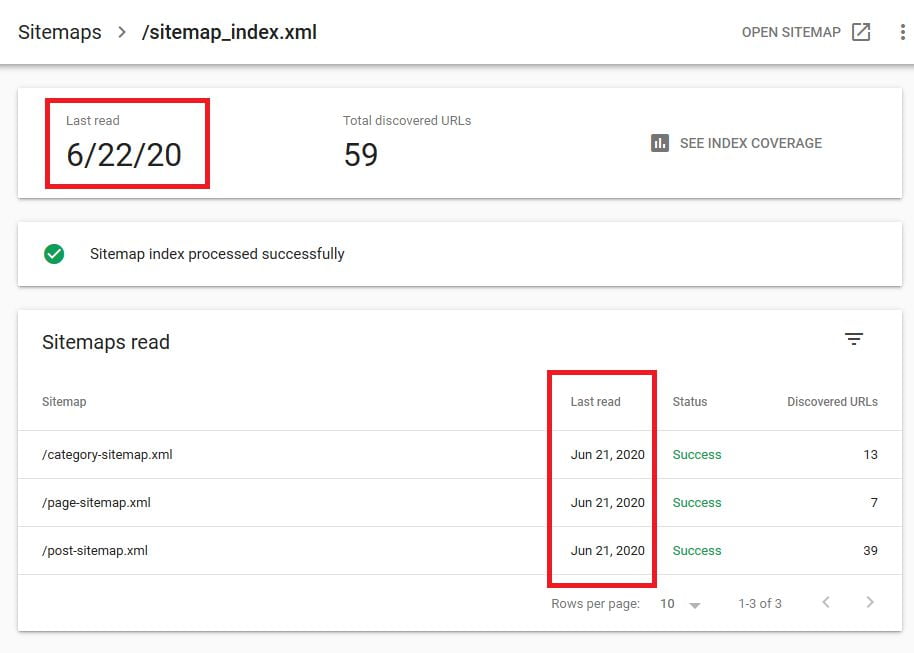
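The same check can also be scripted. The following is a minimal sketch in Python, not an official tool: it requests a sitemap while sending a Googlebot-style User-Agent header, which is roughly what the Chrome steps above do by hand. The user-agent string, the function names, and the example address are assumptions for illustration. Keep in mind that this only imitates the header, so a firewall that verifies crawler IP addresses may treat the request differently from the real Googlebot.

```python
import urllib.error
import urllib.request

# Assumption: a Googlebot-style user-agent string. Check Google's crawler
# documentation for the current value before relying on it.
GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; "
    "+http://www.google.com/bot.html)"
)


def build_request(url: str, user_agent: str = GOOGLEBOT_UA) -> urllib.request.Request:
    """Create a GET request that identifies itself with the given user agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def describe_status(status: int) -> str:
    """Map an HTTP status code to the cases discussed in this article."""
    if status == 200:
        return "accessible"
    if status == 403:
        return "access denied (403)"
    if status == 404:
        return "not found (404)"
    return f"unexpected status ({status})"


def check_sitemap(url: str, user_agent: str = GOOGLEBOT_UA) -> str:
    """Fetch the sitemap once and describe the outcome."""
    try:
        with urllib.request.urlopen(build_request(url, user_agent), timeout=10) as resp:
            return describe_status(resp.status)
    except urllib.error.HTTPError as err:
        # urllib raises HTTPError for 4xx/5xx responses; the code is on the exception.
        return describe_status(err.code)
    except urllib.error.URLError as err:
        # Network-level failure (DNS, refused connection, timeout, ...).
        return f"request failed ({err.reason})"
```

Calling `check_sitemap("https://example.com/sitemap.xml")` (a placeholder address) returns a short description such as `accessible` or `access denied (403)`, depending on how the server answers.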

C. Waiting for the next crawling

If the checks in Subsections 1-A and 1-B show no problems accessing the sitemap, wait a few days for the search engine crawlers to read the sitemap again.

For example, resubmitting a sitemap to Google’s Webmaster Tool may show a “couldn't fetch” error even though the checks in Subsections 1-A and 1-B reveal no problem with sitemap access. In that case, wait for the next “last read” date, as Google reads the sitemap once every several days. Generally, there are no further problems after that.

2. Fixing access to the sitemap

If the search engine cannot access or read your sitemap (e.g., 404 or 403 error), some methods may be worth trying.

Before trying to solve the issue, create a complete backup of your website!

A. Make sure the sitemap address is correct

Before trying various technical things, try opening your sitemap URL directly in the browser. If you get a 404 error, there are several possibilities, including:

- Incorrect sitemap URL; try to double-check your sitemap URL.

- Your site failed to generate a sitemap; if you’re using a CMS (e.g., WordPress) and a sitemap generator plugin, there might be an issue with the sitemap plugin settings.

Note: if you use Chrome and changed the user agent as in Subsection 1-B above, first reset the user agent by checking Select automatically.
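When the URL opens but you are unsure whether it returns a real sitemap (rather than, say, an HTML error page), a quick parse can help. The sketch below is one possible approach using Python's standard library, assuming an XML sitemap that follows the sitemaps.org protocol. The function name and the sample content are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol for XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def list_sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> value; raises ET.ParseError if the XML is malformed."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Hypothetical sitemap content used only for illustration.
SAMPLE = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about/</loc></url>
</urlset>
"""

urls = list_sitemap_urls(SAMPLE)
```

An empty result or a parse error suggests that the address does not serve a valid XML sitemap, which points back to the plugin or generator settings rather than to an access problem.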

B. Use only one sitemap generator

In the case of the WordPress CMS, many people install both an SEO plugin (e.g., Yoast, RankMath) and a sitemap generator (e.g., Google XML Sitemaps). Because each of them may generate sitemaps independently, they may interfere with each other.

Therefore, make sure only one plugin is allowed to generate the sitemap. After that, re-check the access to the sitemap using the sitemap checker or Chrome, as in Section 1.

C. Check robots.txt and .htaccess files

Either the robots.txt or the .htaccess file can block crawlers (e.g., Googlebot) from reading the sitemap [3]. An example of a robots.txt file that blocks Googlebot [4]:

    User-agent: Googlebot
    Disallow: /

The code above means that Googlebot cannot access any part of the site. If you want to allow Googlebot to access your site, delete these two consecutive lines.
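To confirm how a set of robots.txt rules affects a crawler, you can evaluate them offline. The following is a small sketch using Python's standard `urllib.robotparser` module. The helper name `can_crawl` and the example sitemap address are assumptions for illustration, and real search engines may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The two blocking lines from the example above.
BLOCKING_RULES = """\
User-agent: Googlebot
Disallow: /
"""

# Hypothetical sitemap URL used only for illustration.
SITEMAP_URL = "https://example.com/sitemap.xml"

googlebot_allowed = can_crawl(BLOCKING_RULES, "Googlebot", SITEMAP_URL)
bingbot_allowed = can_crawl(BLOCKING_RULES, "Bingbot", SITEMAP_URL)
# With the two lines deleted, no rule applies and access is granted.
allowed_after_removal = can_crawl("", "Googlebot", SITEMAP_URL)
```

Here the rules block only Googlebot: the first check is false, while the other two are true.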

In addition, check the .htaccess file for the following code (or similar):

    # Redirect requests from the listed crawlers to another address
    RewriteEngine on
    RewriteCond %{HTTP_USER_AGENT} Googlebot [OR]
    RewriteCond %{HTTP_USER_AGENT} msnbot [OR]
    RewriteCond %{HTTP_USER_AGENT} yandexbot
    RewriteRule ^.*$ "https\:\/\/www\.indowhiz\.com" [R=301,L]

If it exists, try to delete it temporarily. Then, re-check the access to the sitemap using the sitemap checker or Chrome, as in Section 1.
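On a long .htaccess file, such rules can be easy to miss. The sketch below shows one way to list every `RewriteCond` line that tests the user agent, so you know where to look. It is a simple text scan under the assumption that each directive sits on its own line; it does not evaluate the rules the way Apache does, and the function name is made up for this example.

```python
import re

# Matches lines such as: RewriteCond %{HTTP_USER_AGENT} Googlebot [OR]
USER_AGENT_COND = re.compile(
    r"^\s*RewriteCond\s+%\{HTTP_USER_AGENT\}\s+(\S+)", re.IGNORECASE
)


def find_user_agent_conditions(htaccess_text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern) for every user-agent RewriteCond."""
    hits = []
    for number, line in enumerate(htaccess_text.splitlines(), start=1):
        match = USER_AGENT_COND.match(line)
        if match:
            hits.append((number, match.group(1)))
    return hits


# The example rules from this section, one directive per line.
SAMPLE = "\n".join([
    "RewriteEngine on",
    "RewriteCond %{HTTP_USER_AGENT} Googlebot [OR]",
    "RewriteCond %{HTTP_USER_AGENT} msnbot [OR]",
    "RewriteCond %{HTTP_USER_AGENT} yandexbot",
    r'RewriteRule ^.*$ "https\:\/\/www\.indowhiz\.com" [R=301,L]',
])

hits = find_user_agent_conditions(SAMPLE)
```

Each hit gives the line number and the user-agent pattern, which you can then review or comment out temporarily.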

D. Check CDN settings

Many well-known providers, including Google Cloud CDN, AWS, Cloudflare, and QUIC.cloud, offer Content Delivery Network (CDN) services. Problems sometimes occur because of the cache, firewall, or other settings on the CDN.

To check whether you have a CDN issue, try disabling the CDN. Then, re-check the access to the sitemap using the sitemap checker or Chrome, as in Section 1. There are two possibilities after disabling the CDN:

- The sitemap is accessible. It means that the CDN causes the issue by blocking access to the sitemap. You may need to verify and adjust your cache, firewall, and other settings that may cause the issue. If you have trouble adjusting them, try asking the CDN provider for help.

- The sitemap is still not accessible. It means that your CDN may or may not cause the issue. Because something other than the CDN may also be involved, we cannot be sure that your CDN settings are fine. In this case, we suggest you keep the CDN disabled until you solve the access issue using other methods.
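Before disabling anything, it can be useful to know whether a response passed through a CDN at all. The following sketch guesses the provider from response headers. The two header names are ones commonly added by Cloudflare and Amazon CloudFront, but treat the mapping as an assumption: providers can change or hide headers, other CDNs are not covered, and a match does not prove that the CDN itself produced the error.

```python
from typing import Optional

# Assumption: response headers commonly added by the named CDNs.
# A match is only a hint that the response passed through that CDN.
CDN_HEADER_HINTS = {
    "cf-ray": "Cloudflare",
    "x-amz-cf-id": "Amazon CloudFront",
}


def guess_cdn(headers: dict) -> Optional[str]:
    """Return a likely CDN name based on response header names, or None."""
    lowered = {name.lower() for name in headers}
    for header, provider in CDN_HEADER_HINTS.items():
        if header in lowered:
            return provider
    return None


# Hypothetical response headers used only for illustration.
example_headers = {"Content-Type": "text/xml", "CF-RAY": "0000000000000000-SIN"}
provider = guess_cdn(example_headers)
```

If a 403 response carries such a header, the CDN's firewall or cache settings are a reasonable place to start looking.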

E. Check CMS security plugins

A CMS (e.g., WordPress) is usually used along with a security plugin (e.g., Wordfence, Sucuri, or iThemes). Yet, this is a double-edged sword for website administrators. Security plugins can be helpful but can also be annoying.

To check whether or not the CMS security plugin causes the issue, try disabling it. Then, re-check the access to the sitemap using the sitemap checker or Chrome, as in Section 1. There are two possibilities after disabling the CMS security plugin:

- The sitemap is accessible. It means that the CMS security plugin causes the issue by blocking access to the sitemap. You may need to verify and adjust the settings of the security plugin. If you have trouble adjusting them, try asking the plugin’s developer for help.

- The sitemap is still not accessible. The CMS security plugin may or may not cause the issue. Because something other than the plugin may also be involved, we cannot be sure that your CMS security plugin settings are fine. In this case, we suggest you keep the CMS security plugin disabled until you solve the access issue using other methods.
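The two outcomes above follow the same reasoning as in the CDN check, and they can be written down as a small decision helper. This is only an illustrative sketch: the function name, its parameters, and the wording of the messages are invented here, and the status codes are assumed to come from re-checking the sitemap as in Section 1.

```python
def interpret_toggle(status_before: int, status_after: int, component: str) -> str:
    """Interpret sitemap status codes seen before and after disabling a component."""
    if status_before == 200:
        return "The sitemap was already accessible; nothing to diagnose."
    if status_after == 200:
        # Access came back once the component was off, so it was blocking.
        return f"{component} was blocking the sitemap; review its settings."
    # Still failing: the component may or may not be involved.
    return f"{component} may or may not be involved; keep it disabled and try other methods."


# Example: a 403 before disabling the plugin, a 200 afterwards.
verdict = interpret_toggle(403, 200, "The security plugin")
```

The same helper applies to any component you disable for testing, as long as you change only one thing at a time between checks.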

F. Check WAF settings

A web control panel (e.g., cPanel, Plesk, or WHM) usually comes with a Web Application Firewall (WAF) (e.g., ModSecurity or Imunify360). In rare cases, a WAF such as ModSecurity blocks bot access (even Googlebot) because it considers the bot to be spam or dangerous.

To check whether or not the WAF settings cause the issue, try disabling the WAF. Then, re-check the access to the sitemap using the sitemap checker or Chrome, as in Section 1.

If you can access the sitemap after disabling the WAF, it means the WAF settings cause the issue by blocking access to the sitemap. You may need to verify and adjust the WAF settings. If you have trouble adjusting them, try asking your hosting provider for help.

Generally, a CDN offers online protection through its own WAF. Therefore, check whether your CDN has a WAF. If it does, it is generally safe to disable the WAF on your server.

G. Ask for help

If you have tried all of the methods above to no avail, you may need help from your hosting provider to solve the issue. Alternatively, you can ask a professional to solve the problem.

References

- [1] Google, “Manage your sitemaps: Sitemaps report,” Search Console Help. https://support.google.com/webmasters/answer/7451001?hl=en (accessed Jun. 19, 2020).

- [2] Google, “Overview of Google crawlers (user agents),” Search Console Help. https://support.google.com/webmasters/answer/1061943?hl=en (accessed Jun. 19, 2020).

- [3] A. Gent, “How to Check XML Sitemaps are Valid,” DeepCrawl, Apr. 10, 2019. https://www.deepcrawl.com/knowledge/guides/check-xml-sitemaps-are-valid/ (accessed Jun. 19, 2020).

- [4] Remiz, “Block Google and bots using htaccess and robots.txt,” HTML Remix, May 03, 2011. https://www.htmlremix.com/seo/block-google-and-bots-using-htaccess-and-robots-txt (accessed Jun. 19, 2020).